Associate Teaching Professor of Linguistics at UC San Diego

Director of UCSD's Computational Social Science Program

Curriculum Vitae and Publications

Here’s my latest Curriculum Vitae, with links to PDF versions of my work and to recorded lectures. You can also download this document as a PDF.

Basic Information

- IPA: [wɪl̴ ˈstaɪlə˞]

- Email: wstyler@ucsd.edu

- Homepage: http://savethevowels.org

- Office: Applied Physics and Mathematics Building (AP&M) 4151

- Mailing Address: Department of Linguistics, University of California San Diego, 9500 Gilman Drive #0108, La Jolla, CA 92093-0108

- This CV was last updated on January 11th, 2024.

Current Positions

- Associate Teaching Professor of Linguistics (LSOE) at UC San Diego (July 2023 - Present)

- Director of the Computational Social Science (CSS) Program at UC San Diego (June 2022 - Present)

Past Positions

- Assistant Teaching Professor of Linguistics (LPSOE) at UC San Diego (July 2018 - July 2023)

- Post-Doctoral Research Fellow at the University of Michigan Department of Linguistics (2015-2018)

- Graduate Researcher and Annotation Supervisor at the University of Colorado at Boulder Department of Linguistics (2008-2015)

- Please see ‘Past Research Positions’ below for details on my Post-Doctoral and Graduate Research Positions

- Lead Graduate Teacher, CU Boulder Department of Linguistics (2012-2014)

Education

- Ph.D. in Linguistics, University of Colorado at Boulder, 2008-2015.

- Thesis Title: On the Acoustical and Perceptual Features of Vowel Nasality (Defended March 2015) - Download as a PDF

- See the ‘Doctoral Dissertation’ section below for more details on the dissertation.

- Concurrent B.A./M.A. in Linguistics, University of Colorado at Boulder, Winter 2008.

- M.A. Thesis: Establishing the nature of context in speaker vowel space normalization - Download as a PDF

- B.A. with Distinction, maintaining an overall GPA of 3.75 or greater

Research Interests

Acoustic and Articulatory Phonetics: Speech perception, with an emphasis on variability and normalization and their role in perception; the use of machine learning and computational modeling to simulate, predict, and explore the human process of speech perception and categorization; perceptual cues and features for vowel perception and vowel nasality; modeling articulation using Electromagnetic Articulography (EMA); experimental technology in phonetics; quantitative and statistical analysis in phonetics.

Natural Language Processing: Computational speech processing; automatic speech recognition; text-to-speech synthesis; dialect adaptation and sociolinguistic variation in language models; processing language and idioms in medicine and medical records; event extraction and temporal reasoning; the role of inference and domain knowledge in natural language processing.

Pedagogy and Course Design: Innovative methods for teaching and empowering new teachers; developing online and technologically-mediated tools for enhancing student learning; trauma-informed teaching and mentorship practices; designing equitable systems for student evaluation; free and open course materials; the use of open-source software in linguistics education.

Honors and Awards

Received the UC San Diego 2022 Legacy Lecture Award, based on a campus-wide popular vote in which students ‘choose their favorite professors’; I was the most-voted-for professor among the 150+ nominees and three finalists. As a result, I presented the UCSD 2022 Legacy Lecture on May 6th, 2022. - View a Full Recording of the Talk with Slides

Received the UCSD Linguistics graduate students’ ‘Recognition of Faculty Excellence’ award for AY2021-2022, for my efforts in teaching, mentorship, and student support.

Received the University of Colorado Graduate School’s 2012-2013 Graduate Student Teaching Excellence Award, based on student evaluations, recommendations, and observation in the classroom.

Publications

Below are my current publications. To see my citations in other people’s work, visit my Google Scholar Page.

Peer-Reviewed

A. Coetzee, P.S. Beddor, W. Styler, S. Tobin, I. Bekker, D. Wissing. Producing and perceiving socially structured coarticulation: Coarticulatory nasalization in Afrikaans. Laboratory Phonology. 13(1):13. 2022. https://doi.org/10.16995/labphon.6450 - Download as a PDF

J. Krivokapic, W. Styler, D. Byrd. The role of speech planning in the articulation of pauses. Journal of the Acoustical Society of America. 151(1):402. 2022. https://asa.scitation.org/doi/10.1121/10.0009279 - Download as a PDF

J. Krivokapic, W. Styler, B. Parrell. Pause postures: The relationship between articulation and cognitive processes during pauses. Journal of Phonetics. Vol. 79. 2020. https://doi.org/10.1016/j.wocn.2019.100953

- This paper was submitted to the Journal of Phonetics, an Elsevier journal, before the UC boycott of Elsevier and my subsequent ban. Please do not pay for access to this work. Feel free to email me for a PDF copy, or use alternative academic search engines to locate a copy for yourself.

A. Coetzee, P.S. Beddor, W. Styler, S. Tobin, I. Bekker, D. Wissing. Producing and perceiving socially indexed coarticulation in Afrikaans. In Proceedings of the 19th International Congress of Phonetic Sciences, Melbourne, August 2019. - Download as a PDF

P.S. Beddor, A. Coetzee, W. Styler, K. McGowan, J. Boland. The time course of individuals’ perception of coarticulatory information is linked to their production: implications for sound change. Language, Vol. 94, Num. 4. 2018. - Download as a PDF

A. Coetzee, P.S. Beddor, K. Shedden, W. Styler, D. Wissing. Plosive voicing in Afrikaans: Differential cue weighting and tonogenesis. Journal of Phonetics, 66:185-216. 2018. - Download as a PDF file

W. Styler. On the Acoustical Features of Vowel Nasality in English and French. Journal of the Acoustical Society of America. 142(4):2469-2482. Oct. 2017. http://asa.scitation.org/doi/10.1121/1.5008854 - Download as a PDF file

G. Savova, S. Pradhan, M. Palmer, W. Styler, W. Chapman, and N. Elhadad. Annotating the Clinical Text – MiPACQ, ShARe, SHARPn and THYME Corpora. In Handbook of Linguistic Annotation. Ed. James Pustejovsky and Nancy Ide. Springer. 2017.

- PDF Unavailable, sorry about that. Here’s a link to purchase the book

R. Scarborough, W. Styler, and L. Marques. Coarticulation and contrast: Neighborhood density conditioned phonetic variation in French. In Proceedings of the 18th International Congress of Phonetic Sciences, Glasgow, Aug. 2015. - Download as a PDF file

W. Styler, S. Bethard, S. Finan, M. Palmer, S. Pradhan, P. C. De Groen, B. Erickson, T. Miller, C. Lin, G. K. Savova, and J. Pustejovsky. Temporal annotation in the clinical domain. Transactions of the Association for Computational Linguistics, 2, 2014. - Download as a PDF file

R. Ikuta, W. Styler, M. Hamang, T. O’Gorman, and M. Palmer. Challenges of adding causation to Richer Event Descriptions. In Proceedings of the 2014 ACL EVENT Workshop. Association for Computational Linguistics, June 2014. - Download as a PDF file

W.-T. Chen and W. Styler. Anafora: A web-based general purpose annotation tool. In Proceedings of the 2013 NAACL HLT Demonstration Session, pages 14-19, Atlanta, Georgia, June 2013. Association for Computational Linguistics. - Download as a PDF file

D. Albright, A. Lanfranchi, A. Fredriksen, W. Styler, C. Warner, J. D. Hwang, J. D. Choi, D. Dligach, R. D. Nielsen, J. Martin, W. Ward, M. Palmer, and G. K. Savova. Towards comprehensive syntactic and semantic annotations of the clinical narrative. Journal of the American Medical Informatics Association, December 2012. - Download as a PDF file

R. Scarborough, W. Styler, and G. Zellou. Nasal Coarticulation in Lexical Perception: The Role of Neighborhood-Conditioned Variation. In Proceedings of the 17th International Congress of Phonetic Sciences, pages 1-4, Hong Kong, Aug. 2011. - Download as a PDF file

G. K. Savova, S. Bethard, W. Styler, J. Martin, and M. Palmer. Towards temporal relation discovery from the clinical narrative. In AMIA Annual Symposium Proceedings, page 445. AMIA, 2009. - Download as a PDF file

Non-Peer-Reviewed

W. Styler. A Guide to Online Teaching in the Social Sciences. Published in Spring 2020 as a resource for remote teaching, and continuously maintained at http://savethevowels.org/online

J. Zhu, W. Styler, I. Calloway. A CNN-based tool for automatic tongue contour tracking in ultrasound images. (Submitted to and accepted at Interspeech 2019, then withdrawn due to inability to attend) - Download as a PDF

W. Styler. Using Unix for Linguistic Research. Published in December 2019 as a text for the corpus analysis portion of LIGN 6 (‘Language and Computers’) at UC San Diego, and continuously maintained at http://savethevowels.org/unix/.

W. Styler. Using Praat for Linguistic Research. Published in July 2011 for the 2011 LSA Linguistic Institute’s Praat Workshop, and continuously maintained at http://savethevowels.org/praat/.

Satire and Fiction

W. Styler. Metalinguistically Hardened Caesar-shift Encryption. The snakeoil.cr.yp.to Snake Oil Cryptography Competition. Submitted August 2015. - Download as a PDF file

W. Styler. Speech is an Elaborate Cover for Widespread Telepathy. Speculative Grammarian (A Satirical Linguistics Journal), Vol. CLXX, No. 4, August 2014. http://specgram.com/CLXX.4/09.styler.telepathy.html

W. Styler. In the Shadows of Hemera. Jupiter Science Fiction Magazine’s XXVI ‘Isonoe’ Issue. October 2009.

Conference Presentations and Posters

See my posters page for full-size PDFs of all posters I’ve presented at conferences.

J. Krivokapic, W. Styler, S. Ananthakrishnan (2022). Individual differences in boundary perception. An invited talk presented at Speech Prosody 2022 in Lisbon, Portugal during the ‘Gradience in Intonation’ Workshop. (May 27th, 2022)

J. Krivokapic, W. Styler, D. Byrd (2020). The role of speech planning in the articulation of pause postures. A poster presented at the 12th International Seminar on Speech Production, hosted by Yale and held online due to the COVID-19 Pandemic (December 14th-18th) - Download Poster as a PDF

J. Zhu, W. Styler, I. Calloway (2018). Automatic Tongue Contour Extraction in Ultrasound Images with Convolutional Neural Networks. A poster presented at the Meeting of the Acoustical Society of America in Minneapolis, Minnesota (May 7th-11th) - Download Poster as a PDF

W. Styler, J. Krivokapic, B. Parrell, J. Kim (2017). Using Machine Learning to Identify Articulatory Gestures in Time Course Data. A poster presented at the Meeting of the Acoustical Society of America in New Orleans, Louisiana (December 4th-8th) - Download Poster as a PDF

J. Krivokapic, W. Styler, B. Parrell, J. Kim (2017). Pause Postures in American English. A poster presented at the Meeting of the Acoustical Society of America in New Orleans, Louisiana (December 4th-8th)

W. Styler (2017). Modeling human speech perception using machine learning. A talk presented at the Linguistic Society of America 2017 meeting in Austin, Texas (January 6th)

W. Styler (2016). Normalizing Nasality? Across-Speaker Variation in Acoustical Nasality Measures. A poster presented at the Meeting of the Acoustical Society of America in Salt Lake City, Utah (May 23rd-27th) - Download Poster as a PDF

W. Styler, R. Scarborough (2014). Surveying the Nasal Peak: A1 and P0 in nasal and nasalized vowels. A poster presented at the Meeting of the Acoustical Society of America in Indianapolis, Indiana (October 26th-29th) - Download Poster as a PDF

W. Styler, R. Scarborough, G. Zellou (2011). Use of stimulus mixing to synthesize a continuum of nasality in natural speech. A poster presented at the 162nd Meeting of the Acoustical Society of America in San Diego, California (October 31st-November 4th) - Download Poster as a PDF

R. Scarborough, G. Zellou, W. Styler (2011). Nasal Coarticulation in Lexical Perception: testing the role of neighborhood-conditioned variation. A poster presented at the LSA Summer Institute, 2011 - Download Poster as a PDF

Invited Talks

W. Styler (2023). How to Make Writing Suck Less. Guest talk in the Computational Social Sciences Colloquium Series (May 16th).

W. Styler (2023). Coping with and supporting students through trauma. Guest talk in Cognitive Science 200 (‘Academics after Adversity’) (October 21st).

W. Styler and A. Sculimbrene (2022). Lost in Translation: Miranda Rights and Language. Presented at the New Hampshire Association of Criminal Defense Lawyers’ Fall 2022 Continuing Legal Education Conference in Manchester, NH. (October 21st).

W. Styler (2022). Language is Incredible. UC San Diego’s 2022 ‘Legacy Lecture’ Presentation, selected by student vote. (May 6th). https://savethevowels.org/legacy

W. Styler (2021). Linguistic Problems with Statistic Solutions. Invited Colloquium talk for the UC Riverside Department of Statistics. (October 12th).

W. Styler (2020). Specialized skills and tools for remote linguistics instruction. Invited talk for the Linguistics Faculty at the University of Michigan. (July 20th).

W. Styler (2019). Using Transparent Machine Learning to Study Human Speech. Invited Colloquium talk at the UCLA Department of Linguistics. (November 15th).

W. Styler (2019). Machine Learning as a tool in Speech Research. Invited Colloquium talk at the San Diego State University Department of Linguistics. (March 8th).

W. Styler (2017). Ask An Algorithm: Using Machine Learning to study Human Speech. Invited Colloquium talk at Michigan State University in East Lansing, MI. (September 28th).

W. Styler (2017). A Nose by Any Other Wave: Studying the Perception of Vowel Nasality with Humans and Machines. Presented as the Paul Efron Distinguished Lecturer at Pomona College (March 24th).

W. Styler (2016). On the Acoustical and Perceptual Features of Vowel Nasality. Presented at the Northwestern University ‘Phonatics’ Colloquium (April 27th).

Teaching Experience

Instructor, as Teaching Faculty at UC San Diego

Legend: F = Fall Quarter, W = Winter Quarter, S = Spring Quarter, AY = all three quarters of the given academic year. Numbers give the last two digits of the year (e.g. W21 = Winter 2021).

- LIGN 42 - Linguistics of the Internet (W21, W22, W24)

- A course I created and first taught, this course looks at the intersection of the idea of ‘memes’, their growing online popularity, their many analogies to other forms of human language, and the utility of linguistic analyses and ideas in describing their meaning, functions, and use.

- First taught as LIGN 47, a freshman seminar, then as ‘Linguistics of Memes’ in Winter 2021, and revised in Winter 2024 to ‘Linguistics of the Internet’, with a slightly broader focus on all linguistic phenomena of the terminally online.

- CSS 209 - CSS Research Colloquium (AY23-24)

- A graduate seminar for Computational Social Science with a combination of dedicated talks for the CSS audience, co-located talks in Social Science departments, professionalization talks within CSS, and group discussions among CSS Ph.D. and M.S. students.

- LIGN 101 - Introduction to Linguistics (F18, S19, W20, S20, AY20-21, AY21-22, AY22-23, F23, W24)

- An undergraduate introduction to Linguistics, focusing on providing an overview of phonetics, phonology, morphology, syntax, semantics, and pragmatics, while inspiring students to move deeper into the field.

- LIGN 500 - Linguistics Instructional Assistant Training (F18, F19, F20, F21, F23)

- A course I created, LIGN 500 is designed to complement campus-level TA training by introducing important situational, legal, field-specific, and pedagogical information in service of helping graduate students be the best TAs they can be. Includes an initial two-day intensive covering theory and classroom management, and weekly sessions on topics in teaching through the quarter.

- Computational Social Sciences M.S. Bootcamp (as Co-Instructor) (Summer 2023)

- Planned and taught one week of the CSS Bootcamp, focused on introductory computer science topics (e.g. computing fundamentals, networking, development, operating systems) as well as presentation and documentation design (with student microteaching).

- LIGN 113 - Hearing Science and Hearing Disorders (S20, W23)

- A course I created and first taught, LIGN 113 provides students with an introduction to the anatomy and physiology of hearing, along with a clinical perspective on audiological testing and the nature of acquired hearing disorders.

- LIGN 287 - Designing a Linguistics Course (F22)

- A collaborative graduate workshop focused on course design and the unique difficulties posed by designing linguistics coursework, assignments, and evaluations.

- CSS 201S and 202S - Computational Social Sciences M.S. Bootcamp (Summer 2022)

- Served as Director and Coordinator of the CSS Bootcamp courses in Summer 2022, the first year of the M.S. program. This included scheduling co-teaching faculty and guest talks, basic curriculum guidance, student affairs coordination, and approximately 3 weeks of teaching course sessions myself on topics including computing, Unix, networking, audio, acoustics, teaching, and more.

- LIGN 6 - Language and Computers (W19, W20, W21, S22)

- A course I created and first taught, LIGN 6 is designed to provide an accessible introduction to computational linguistics and natural language processing, using the design of voice-based virtual assistants (e.g. Siri) as the core ‘task’ and analyzing each level of linguistic understanding and analysis that task requires.

- LIGN 214 - Speech Perception (F19)

- A graduate seminar focused on the human perception of speech from a theoretical and experimental perspective.

- LIGN 120 - Morphology (S19)

- An undergraduate introduction to morphology, focusing both on the analysis of morphological data, and on some of the interesting theoretical issues of the lexicon and of the treatment of morphological variation.

- LIGN 111 - Phonology (W19)

- An undergraduate introduction to phonology, focusing on the generative, ordered-rule-based approach to phonology, with a heavy emphasis on data analysis.

Instructor for Independent Study

- Winston Durand - Educational Technology and Development (F20)

- This independent study focused on the goals, nature, development, and constraints on Educational Technology in a Higher Ed setting. As a part of the course and as the final ‘deliverable’, we collaboratively brought IntIPA to life, an online tool for learning the use of the International Phonetic Alphabet, described above.

- Allison Park - Linguistics of Keysmashing (F19, W20, S20)

- This independent study series later turned into an Honors Thesis, and is described in detail below in ‘Honors Thesis Advisor’.

Workshop Instructor

- Using machine learning to study human language - 2018 Great Lakes Expo for Experimental and Formal Undergrad Linguistics (GLEEFUL)

- Designed and taught a three-hour mini-course discussing the basics of Machine Learning, and its applications as an evaluative, engineering, and experimental tool in the study of corpora, speech, and articulation.

- Praat Beyond the Basics: Measurement, Manipulation, Automation, and More - 2017 Linguistic Society of America Annual Meeting Mini-Course

- Designed and taught a four-hour mini-course covering the use of the Praat Phonetics Software Package for more complex tasks like measuring difficult data, sound manipulation, and automated/script-based analysis.

- Using Praat for Linguistic Research - LSA Linguistic Institute, July 10th and 16th, 2011

- Taught a four-hour intensive seminar about using the Praat Phonetics Software package for linguistic measurement, sound modification, and Praat scripting. The workshop was repeated twice during the institute (due to high demand), and once again in the Fall of 2011.

- Expect the Unexpected! - CU Boulder Graduate Teacher Program, March 11th, August 22nd, and October 7th 2013

- An interactive workshop for the general campus audience focused on preparing college-level teachers for a variety of unexpected, uncomfortable, and unpleasant situations that can arise in the course of conducting a class, with the goal of helping teachers manage chaos with confidence. Repeated three times to meet demand.

- Writing Readably and Reading Writably - For CU Boulder Graduate Teacher Program, January 9th, 2014

- A one-hour course for graduate students about writing in an academic setting, as well as purposeful research, all with a focus on writing papers that somebody might, someday, actually want to read. - View a Full Recording of the Talk

- LaTeX for Linguists - CU Boulder Department of Linguistics, November 17th 2012, October 22nd 2013

- A 2.5-hour intensive course on the use of the LaTeX typesetting engine for linguistics-related writing and teaching material generation, with special emphasis on bibliography, exam creation, and the proper use of International Phonetic Alphabet (IPA) characters. Repeated by popular demand.

- Putting the LOL in Classroom Learning - For CU Boulder Graduate Teacher Program, January 21st 2014, September 22nd 2014

- A one-hour workshop focused on the skillful application of humor in lectures, exams, and assignments, with emphasis on where to draw the line between “funny” and “offensive”.

- Active Harmer Response - For CU Boulder Graduate Teacher Program, September 16th 2013

- A one-hour workshop, coordinated with the University of Colorado Police, discussing how student instructors should handle active harm situations (such as bomb threats or active shooters), with an emphasis both on ensuring one’s personal safety and on the teacher’s role in promoting student safety.

Invited Panelist

- Learn about Hiring from Faculty Hiring Committee Members - For CIRTL and the CU Boulder Center for Teaching and Learning, January 25th, 2024

- Served as a panelist discussing the job search process from a faculty perspective, in a 200+ person session aimed at teaching-focused graduate students.

- Early Career Faculty Panel - For the CU Boulder Center for Teaching and Learning, March 7th, 2023

- Served as a panelist discussing the early-career issues facing teaching-track faculty in Academia.

Lead Graduate Teacher for the University of Colorado Department of Linguistics

- Departmental Lead for the development of Graduate Teaching, CU Boulder Linguistics, Academic year 2012-2014

- Coordinated departmental graduate teaching, consulted for graduate teachers, and conducted video consultations for new and returning graduate teachers. Also organized and led the Linguistics Department Teaching intensives for Fall and Spring 2012-2014. Originally hired for 2012-2013, but asked to reprise the role in 2013-2014. Through the University of Colorado Graduate Teacher Program (http://gtp.colorado.edu/).

Graduate Instructor-of-Record, University of Colorado at Boulder

- LING 5030 - Graduate Linguistic Phonetics (Fall 2011)

- Planned and taught a graduate-level course focused on a variety of topics in articulatory, acoustic, and laboratory phonetics, for a class of 26 incoming graduate students. Tasked with the preparation of two weekly 1.5-hour lectures and a weekly lab session, and all of the homeworks, labs, tests, and other materials necessary for the class to function.

- LING 3100 - Language Sound Systems (Spring 2011, Spring 2012, Fall 2012)

- Planned and taught a survey of acoustic and articulatory phonetics and phonology to a class of undergraduates. Tasked with preparing and presenting two weekly lectures as well as creating materials for one weekly lab, and all of the homework, tests, and other materials necessary for the class to function. Also served as Graduate Teaching Assistant for this course in Spring 2013.

- LING 1020 - Languages of the World (Fall 2014)

- Planned and taught an introductory course in linguistics, focused on examining many of the languages and language families in the world with an eye towards typology, patterns, and understanding the link between how languages accomplish communication and how Language in general works.

Chair and Coordinator, 2007 CU Stampede First Year Leadership Camp

- 2007 CU Stampede First Year Leadership Camp (August 19th-20th 2007)

- Coordinating, planning, teaching and advising at an intensive orientation/conference for a group of 50 incoming Freshmen.

Invited Guest Lecturer

- UC San Diego - LIGN 167 - Deep Learning for Natural Language Processing (Fall 2022)

- University of Michigan - LING 512 - Phonetics (Fall 2015, Fall 2016, Fall 2017)

- University of Michigan - LING 612 - Advanced Phonetics (Spring 2017)

- University of Michigan - LING 209 - Language and Mind (Spring 2015)

- University of Colorado - LING 7030 - Phonetic Theory (Fall 2014)

- University of Colorado - LING 7800/3800 - Computational Lexical Semantics (Spring 2013, Fall 2013)

- University of Colorado - LING 3100 - Language Sound Systems (Spring 2009, Fall 2010, Fall 2011, Spring 2013)

- University of Colorado - LING 2000 - Introduction to Linguistics (Fall 2010, Spring 2011, Fall 2011, Spring 2012, Fall 2012, Spring 2013, Fall 2013)

- University of Colorado - LING 4220 - Language and Mind (April 2008)

- University of Colorado - LING 1000 - Language and US Society (Summer 2011)

Academic Service

University Service at UC San Diego

- Director of Computational Social Sciences (CSS) Program (June 2022-Present)

- Serving as the faculty lead for the CSS program, overseeing committee assignments, event planning, hiring, curriculum issues, student outcomes, administrative matters, and more.

- As Director, I’ve guided the program through…

- The first years of the M.S. in Computational Social Science program

- The first years of the CSS Ph.D. Specialization

- Development of student-facing documentation for many elements of the M.S. program

- The implementation of the UCSD ‘Women in Tech’ talk series hosted by CSS

- Site Coordinator for the North American Computational Linguistics Olympiad (NACLO) (2023-Present)

- Creating a rigorous testing environment for high school students from around Southern California traveling to participate in what is, effectively, a linguistic analysis contest.

- Designated ‘Faculty Tech Mentor’ for Linguistics and Human Developmental Sciences (Winter 2020 - Spring 2022)

- Aided faculty in Linguistics and HDS with the sudden transition to online teaching during the COVID-19 Pandemic, focusing on training, course design, and sharing resources and technical support.

- UCSD ‘Cyber Champion’ Cybersecurity Faculty Consultant (2018 - 2023)

- Worked with ITS ‘Cyber Champion’ team to discuss, guide, and help improve messaging surrounding cybersecurity on the UCSD campus until the program’s dissolution in 2023.

Student Advising

Doctoral Dissertation Co-Advisor

- Benjamin Lang (UCSD Linguistics, In Progress, ABD)

- Co-Advising with Marc Garellek

- Mark Simmons (UCSD Linguistics, In Progress)

- Co-Advising with Sharon Rose

Doctoral Dissertation Committee Member

- Yaqian Huang (UCSD Linguistics, 2022)

- Phonetics of Period Doubling

- Yuan Chai (UCSD Linguistics, 2022)

- Phonetics and phonology of checked phonation, syllable, and tone

- Jian Zhu (University of Michigan Linguistics, 2022)

- A computational account of selected patterns of linguistic variation and change

- Michael Obiri-Yeboah (UCSD Linguistics, 2021)

- Phonetics and Phonology of Gua

Honors Thesis Advisor

- Oishani Bandopadhyay (UCSD, in progress)

- Oishani is studying patterns of errors in automatic speech recognition when exposed to dialects and terminology from the Indian subcontinent

- Mia Khattar (UCSD, in progress)

- Mia is working on ‘deepfake’ Text-to-Speech methods and evaluating their confusability with the voices they’re imitating

- Allison Park (UCSD, 2021)

- Allison worked on the linguistics of ‘keysmashing’ (e.g. asdskhjasldkf) and the sociolinguistics and morphophonology of this phenomenon

- Gloria Cheung (UCSD, 2021)

- Gloria looked at the pronunciation of written words which could be ambiguously read as English or Spanish (e.g. ‘taleo’) by participants of varied language backgrounds

- Victoria McAllister (UCSD, Graduated 2020)

- Victoria worked on cross-linguistic correlations of Voice Onset Time (VOT) in English-Spanish bilingual speakers across their two languages. This thesis, though nearly complete, was not submitted due to complications arising from the COVID-19 Pandemic.

Honors Thesis Committee Member

- Kaitlin Lee (UCSD Cognitive Science, in progress)

- Kaitlin is looking at the perception of gender in voices by young children in an experimental, eye-tracking study

CSS Capstone Mentor

- Wayne Lee (AY2023-2024)

- Working with Dr. Amelia Glaser to co-supervise Wayne Lee, who is using Natural Language Processing to classify and examine Ukrainian Poetry

Reviewer for Journals, Grants, and Conferences

Journals, grant agencies, and conferences are listed below by recency.

- Journal of the Acoustical Society of America

- Grant Reviewer for the Dutch Research Council (NWO)

- Frontiers in Communication

- Language

- Laboratory Phonology

- Journal of Phonetics

- Journal of the International Phonetic Association

- Speech Communication

- Phonology

- Clinical Linguistics & Phonetics

- Journal of West African Languages

- Linguistics Vanguard

- Empirical Methods in Natural Language Processing

- Annual Conference on African Linguistics 53

Corpora Maintained

- EnronSent email corpus (Maintainer from 2006-Present)

- Fully described in W. Styler (2011). The EnronSent Corpus. Technical Report 01-2011, University of Colorado at Boulder Institute of Cognitive Science, Boulder, CO, January 2011. - Download as a PDF

- More information available at http://savethevowels.org/enronsent

Competencies

Human languages

- English: Fluent L1 Speaker

- Spanish: Fluent L2 Speaker

- Russian: Extremely rusty, but previously advanced L2

- French: Beginning speaker, intermediate reading fluency

- Some working experience with: Afrikaans, Lakota, Zarma, American Sign Language, and Ewe

Technological Competencies

- Programming and Scripting in Python, R, Praat, bash/zsh Shell, LaTeX, HTML/CSS, and MATLAB

- Electromagnetic Articulography using Dual Carstens AG501 EMA systems

- Eye Tracking (using SR Research EyeLink 1000 and 1000+)

- Statistical Modeling using Linear Mixed Effects Models and Bayesian LMER

- Machine Learning (using SVMs, RandomForest, PCA, Naive Bayes, Perceptrons, (Deep) Neural Networks)

- Digital Audio Recording, Playback, and Signal Processing

- Experimental Programming (MATLAB, PsychoPy, ExperimentBuilder, PsyScope X)

- Using MATLAB for Data processing, capture, and analysis

- Ultrasound for Articulation (Zonare Z.One 3.5, Articulate Assistant Advanced, and other tools)

- Oral/Nasal Airflow Measurement (Glottal Enterprises ‘Dualview’ System, SQLabs EVA2, ‘Earbuds’ method)

- Basic audiological tools (audiometers, calibrated headphones and microphones)

- Advanced proficiency in both macOS and Linux-based Operating Systems

- Moderate Proficiency in Linux-based Server Administration

- General proficiency in Windows 10/11 and FreeBSD

- Basic proficiency in CAD/CAM and 3D Printing using OpenSCAD and FreeCAD

- Official holder of a ‘License to Wug’ for educational purposes from Jean Berko Gleason

Doctoral Dissertation: ‘On the Acoustical and Perceptual Features of Vowel Nasality’

Overview

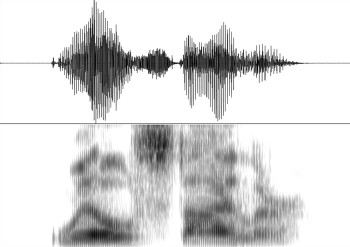

Vowel nasality is, simply put, the difference in the vowel sound between the English words “pat” and “pant”, or between the French “beau” and “bon”. This phenomenon is used in languages around the world, but is relatively poorly understood from an acoustical standpoint, meaning that although we as human listeners can easily hear that a vowel is or isn’t nasalized, it’s quite difficult for us to measure or identify that nasality in a laboratory context.

The goal of my dissertation is to better understand vowel nasality in language by discovering not just what parts of the sound signal change in oral vs. nasal vowels, but which parts of the signal are actually used by listeners to perceive differences in nasality.

I’ve written up a summary of the process, aimed at a more general audience, here, or you can read the abstract below.

Dissertation Abstract

Although much is known about the linguistic function of vowel nasality, either contrastive (as in French) or coarticulatory (as in English), less is known about its perception. This study uses careful examination of production patterns, along with data from both machine learning and human listeners, to establish which acoustical features are useful (and used) for identifying vowel nasality.

A corpus of 4,778 oral and nasal or nasalized vowels in English and French was collected, and feature data for 29 potential perceptual features was extracted. A series of Linear Mixed-Effects Regressions showed 7 promising features with large oral-to-nasal feature differences, and highlighted some cross-linguistic differences in the relative importance of these features.

Two machine learning algorithms, Support Vector Machines and RandomForests, were trained on this data to identify features or feature groupings that were most effective at predicting nasality token-by-token in each language. The list of promising features was thus narrowed to four: A1-P0, Vowel Duration, Spectral Tilt, and Formant Frequency/Bandwidth.

These four features were manipulated in vowels in oral and nasal contexts in English, adding nasal features to oral vowels and reducing nasal features in nasalized vowels, in an attempt to influence oral/nasal classification. These stimuli were presented to L1 English listeners in a lexical choice task with phoneme masking, measuring oral/nasal classification accuracy and reaction time. Only modifications to vowel formant structure caused any perceptual change for listeners, resulting in increased reaction times, as well as increased oral/nasal confusion in the oral-to-nasal (feature addition) stimuli. Classification of already-nasal vowels was not affected by any modifications, suggesting a perceptual role for other acoustical characteristics alongside nasality-specific cues. A Support Vector Machine trained on the same stimuli showed a similar pattern of sensitivity to the experimental modifications.

Thus, based on both the machine learning and human perception results, formant structure, particularly F1 bandwidth, appears to be the primary cue to the perception of nasality in English. This close relationship of nasal- and oral-cavity derived acoustical cues leads to a strong perceptual role for both the oral and nasal aspects of nasal vowels.

Dissertation Details

- Title: “On the Acoustical and Perceptual Features of Vowel Nasality”

- Advisor: Dr. Rebecca Scarborough

- Defense Date: March 18th, 2015

- Download: Download a PDF Copy (3.4 MB) - BibTeX Citation

Past Research Positions

University of Michigan, Department of Linguistics

- Post-Doctoral Research Fellow for Jelena Krivokapic (Fall 2016 - Summer 2018)

- A Post-Doctoral Fellowship, working with Jelena Krivokapic to bootstrap and manage the University of Michigan’s EMA (Electromagnetic Articulography) lab, using two Carstens AG501 EMA units to conduct research on articulation, prosody, and gesture.

- Major responsibilities included operation and maintenance of EMA systems, writing software and scripts for data capture and processing, statistical analysis using R and MATLAB, machine learning for data prediction, as well as the day-to-day management and equipment maintenance for the department’s Sound Lab.

- Post-Doctoral Research Fellow for Patrice Speeter Beddor and Andries Coetzee (Spring 2015-Summer 2018)

- A Post-Doctoral Fellowship, working with Patrice Speeter Beddor and Andries Coetzee on data collection, analysis, and modeling for a grant from the National Science Foundation, titled The time course of speech perception and production in individual language users. This involved the implementation of several major experiments utilizing an SR Research Eyelink 1000+ Eye-Tracking System, a Zonare Z.One Ultrasound Machine, optical position tracking, and acoustic recording, as well as the purchase, testing, and deployment of a new nasal airflow capture system.

- Major responsibilities included data collection, data processing script creation, statistical analysis using R and machine learning methods, as well as the day-to-day management and equipment maintenance for the department’s Sound Lab.

University of Colorado at Boulder, Department of Linguistics

- Graduate Research Assistant to Dr. Rebecca Scarborough (Spring 2008-Spring 2015)

- Assisted Dr. Scarborough with phonetic data collection and analysis, along with the design of new computational methods of data analysis and measurement. Particular emphasis on the development of new tools for accurately and quickly analyzing formant and spectral measures in large corpora of data, as well as the computational manipulation of nasality.

- Working primarily with Praat, Python, SPSS, PsyScope X, PsychoPy and R.

- Graduate Research Assistant and Annotation Supervisor for Dr. Martha Palmer (Spring 2008-Fall 2014)

- Assisted and directed various natural language processing and corpus annotation projects. Main projects included the development of a schema for the coding and annotation of temporal relations within medical records (the THYME project) and in the general domain (RED for the DEFT project), and the implementation of the Unified Medical Language System (UMLS) to mark the information desired in clinical questions (MiPACQ).

- This position involved training and supervising a team of 10+ annotators as they progressed through a series of annotation tasks using Protege, Knowtator, Jubilee, and other tools, and it also led to a role in the development of Anafora, a lightweight annotation tool to replace Protege/Knowtator. This annotation and schema development work, carried out in collaboration with the Mayo Clinic and Harvard Children’s Medical Clinic, has since given rise to two published papers and several awarded grants.

Volunteer Work

Work for the CU Boulder Residence Hall Association

Student Leadership Mentor, CU Stampede First Year Leadership Camp

- CU Stampede First Year Leadership Camp (2008-2012)

- Mentoring a group of 5-10 first-year students, during a small training camp at the start of the fall semester, to get involved on campus and make an effective transition into the new environment at CU.

Invited Program Presenter, CU Stampede First Year Leadership Camp

- “Language is magic” (2013-2014)

- A presentation on the beauty, magic, and joy to be found in languages of the world, with emphasis on the improbable nature of human communication.

- “Your Writing System is lying to you” (2007-2012)

- An accessible introduction to articulatory phonetics and phonology using examples from English speech, framed as an exploration of the role of passion in leadership.

- “The View from the other side of the Desk” (2011-2014)

- A short seminar on the lessons students in a university setting can learn from thinking like the instructor.

Winner, National Residence Hall Honorary CU Student of the Month

- Awarded in September 2006 for exceptional work in academics and in Residence Hall Life, principally as ‘Student Outreach Coordinator’

Version History

- Oct. 2023: Changed format to Markdown-based approach for ease of maintenance, improved some descriptions, added missing elements and PDF links